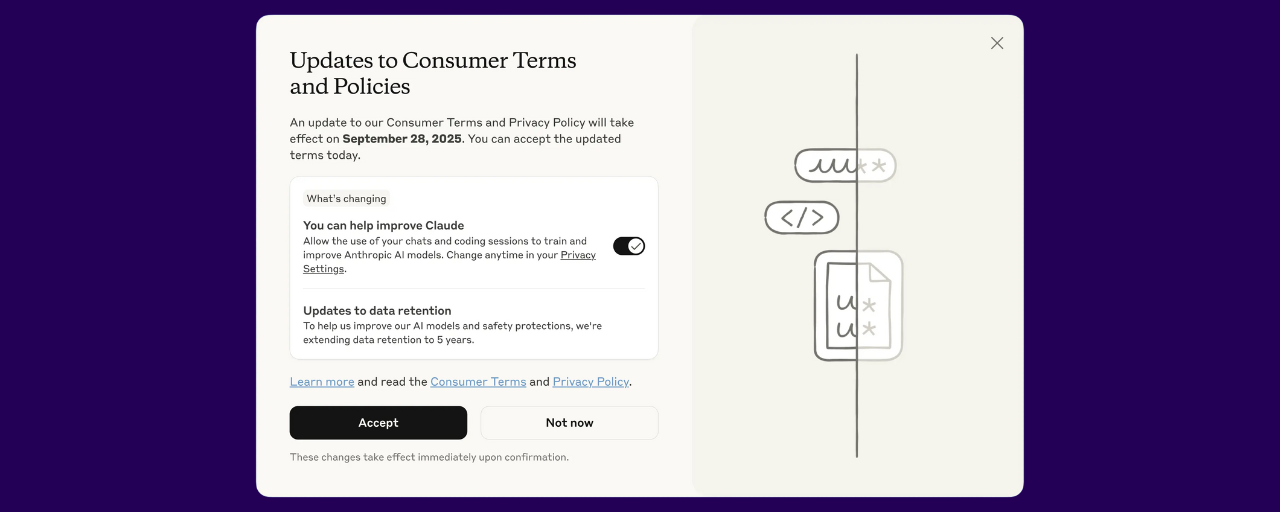

Anthropic updated its consumer terms and privacy policy for Claude this past September 28th, and there are a couple of important things you should know if you have a Claude account.

This update applies if you're on the Claude Free, Pro, or Max plans. If you use the API under Anthropic's commercial agreement, this doesn't affect you; you're covered by different terms.

Here's what changed: with your permission, Anthropic can now use your chats and coding sessions to train and improve its AI models.

Essentially, you're giving Anthropic permission to use anything you put into your chats or coding sessions with Claude, including Claude Code sessions when you're signed in with one of these plans. If you give that permission, Anthropic also retains this data for up to 5 years.

The important thing to understand is that this setting is currently enabled by default. If you're working with sensitive code, client data, or anything else you don't want used to train AI models, you can opt out in your Claude privacy settings.

If you're using Claude in your business, you should check with your team or legal department about whether this affects your data handling policies. And if you're working with client data, definitely make sure you understand what this means for your privacy obligations.

The good news is that Anthropic is being transparent about this and giving you control over the setting, but you have to actually go in and make that choice if you want to opt out.